Commercializing Artificial Intelligence Platforms


Introduction

Commercializing Artificial Intelligence platforms, products and tools is much more challenging than commercializing traditional software. This is because:

1. It represents a revolution in computing

2. It is new to IT staff, conceptually and in practice

3. It requires adoption of a substantially different way of thinking about computing

4. It requires a different way of thinking about business.

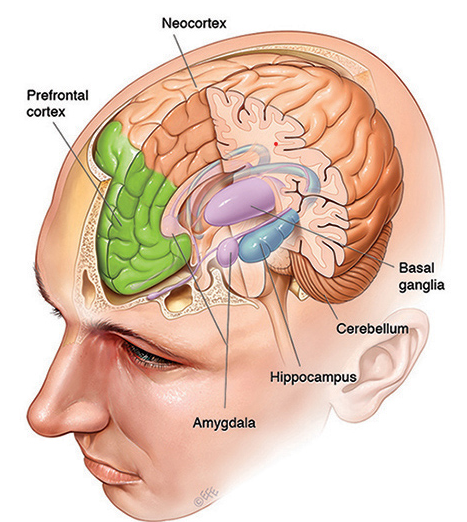

Human Intelligence

In the U.S., every year, about 2.6 million people have some type of brain injury, caused by trauma, stroke, tumor, or other illnesses. The greatest factor in functional recovery after brain injury comes from the brain’s ability to learn, called neuroplasticity.

After injury, neuroplasticity allows intact areas of the brain to adapt and attempt to compensate for damaged parts of the brain. Developing brains are more able to regenerate than adult brains.

It’s possible that one day we will discover ways in which to restore cognitive function to higher levels than we can today, maybe to regain full function.

Understanding that there are levels of cognition in human brains informs an understanding of the development of artificial cognitive ability.

Today, we are starting to build elementary cognitive functionality in computers, albeit only equivalent to that of a months-old infant. Computers lack imagination, emotion, and creativity, but they are now capable of learning and reasoning logically.

A computer displaying “artificial intelligence” has several advantages over humans:

1. an average desktop computer can ‘speed-read’ text at 400 times the speed of a human

2. computers can store or access massive amounts of data that they never forget

3. they can process information at astonishing speed.

For those reasons, artificial intelligence complements humans as a tool, rather than cognitively outperforming them.

In recent years we have seen outrageous hyping of artificial intelligence, of its poor cousin twice removed, Machine Learning, and of various types of graph database.

As we start to use artificial intelligence to solve major challenges such as those posed by viral pandemics, we will find that the technology we need has been right in front of us for twenty years. That it has been ignored is the worst failure of technology leadership since the computer age began. It has been left to small companies to redress this.

The History of Artificial Intelligence

The story of Artificial Intelligence started 64 years ago, at the 1956 Dartmouth Summer Research Project on Artificial Intelligence. It was organised by John McCarthy, then a mathematics professor at Dartmouth College.

In his proposal, he stated that the conference was “to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

The idea of building an intelligent computer was met with one disappointment after another; so much so that regular setbacks led to periodic “AI winters” of despair.

In 1998 there was a breakthrough. Nine years earlier, in 1989, an Oxford University graduate, Tim Berners-Lee, had invented what became known as the World Wide Web, which most of us refer to as “the Internet”.

In 1998 he proposed an incomplete architecture for a web of intelligent computers communicating with each other across the Internet. He called it a “Semantic Web”.

He proposed that the computers would be “intelligent” because they would understand the meaning of data that they exchanged without human programming; they would understand the “semantics” of their messages so that they could communicate intelligently, a little like humans do, at a basic but effective level.

What Berners-Lee “dreamed” of required a significant departure from conventional computing, a different way of structuring data to engineer it into information that a computer could understand by itself, without the need for computer instructions provided by humans. It was a breakthrough in the then forty-year-old computer science field of “Knowledge Engineering”.

We now accept that a computer cannot be intelligent if it doesn’t have knowledge that it can understand by itself; no knowledge = no intelligence.

The twenty years after the Berners-Lee proposal

Between 2003 and 2012, the Computer Engineering school at Oxford University pursued the Berners-Lee vision, applying it to medical research. Had that leadership been followed by others, we might have avoided the latest pandemic of virus diseases, COVID-19, and its multi-trillion-dollar cost. We might have cured some cancers.

In 2012 we embarked on research and development of the Berners-Lee proposal for Knowledge Engineering.

At around the same time, other companies attempted to build alternative approaches to the Berners-Lee proposal. These approaches were premised on the argument that what Berners-Lee proposed was “too complex”, and that customers would not be able to transition to a new paradigm in computing based on a revisionist view of how data should be structured.

Meanwhile IBM had partly embraced the Berners-Lee proposal. In 2014 they announced an Artificial Intelligence platform that they called “Watson”, one that embodied enhancements to the proposed Berners-Lee architecture.

The hyping of alternative approaches to Artificial Intelligence continued for a couple more years, fed by exuberant funding from venture capital firms, mainly in China and the US.

In 2020 the Berners-Lee proposal has been reborn.

The alternative approaches to the Berners-Lee proposal for Knowledge Engineering have met with increasing skepticism. They are seen as unsuitable for anything other than simple applications, and are sometimes referred to as “training wheels”.

In 2020 we are seeing acceptance from leading industry analysts that the semantic computing models proposed by Berners-Lee are central to “robust and automated Artificial Intelligence”.

“Semantic Computing technology is used to digitally represent knowledge for AI systems to understand their world in context, using semantic “graphs”, ontologies, metadata and rules. Semantic technology can represent relationships between data and ties together different pieces of data because of like attributes (including hidden relationships).” Michele Goetz, Forrester Research, 2017.

That inherent ability to find “hidden relationships” in massive amounts of data just might deliver the clues we need to attack serious diseases (that have far reaching economic consequences) ranging from the common cold to viral pandemics and cancers.
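As a rough illustration of what finding a “hidden relationship” can mean in practice, the sketch below stores statements as (subject, predicate, object) triples and reports pairs of subjects that are never linked directly but share an object. It is a minimal sketch in plain Python, not the method of any particular product. The data and every identifier (`ex:DrugA`, `ex:ProteinX`, `linked_by_shared_object`) are invented placeholders, not research findings.

```python
from collections import defaultdict
from itertools import combinations

# Invented placeholder statements: (subject, predicate, object).
triples = [
    ("ex:DrugA", "ex:targets", "ex:ProteinX"),
    ("ex:DiseaseB", "ex:involves", "ex:ProteinX"),
    ("ex:DrugC", "ex:targets", "ex:ProteinY"),
]


def linked_by_shared_object(triples):
    """Return (subject_a, subject_b, shared_object) for subjects sharing an object."""
    subjects_by_object = defaultdict(set)
    for subject, _predicate, obj in triples:
        subjects_by_object[obj].add(subject)

    links = set()
    for obj, subjects in subjects_by_object.items():
        # Every pair of distinct subjects pointing at the same object is a
        # candidate indirect relationship.
        for a, b in combinations(sorted(subjects), 2):
            links.add((a, b, obj))
    return links


links = linked_by_shared_object(triples)
for a, b, shared in sorted(links):
    print(f"{a} and {b} are connected through {shared}")
```

Here the drug and the disease are never stated to be related, yet the shared protein connects them. A real semantic graph would use standard query and reasoning tools rather than a hand-written loop.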

“To take the next leap in technology advancement will require mature software, capable of rapidly ingesting, understanding, analyzing, and presenting interpretable results; this is where ontologies come in. In my opinion, ontologies will be the enabler of the next generation of disruptive technologies.”

The COVID-19 pandemic has put sudden pressure on medical researchers, who now require the support of strong artificial intelligence systems. They need a step-up in computer-aided research, and the technology that provides it is the very same one proposed by Berners-Lee in 1998, one that enables a semantic web of medical researchers.

Coincidentally, leading artificial intelligence practitioners are applying the same concepts to enterprise data challenges, in companies and government agencies whose complex data needs require robust and automated artificial intelligence solutions.

The State-of-the-Art in Artificial Intelligence platforms

The large software companies have failed medical research, while small innovative companies have taken the lead. Companies like ours have identifiable characteristics.

We have:

- Deep expertise in data modelling techniques

- Spent at least five years researching the Berners-Lee technology proposals and committed to a single focus on their implementation in Artificial Intelligence solutions

- Dispensed with non-compliant or hybrid models for Knowledge Engineering

- Built some useful tools for managing the data models so that sophistication is appreciated, rather than complexity feared

- Invented complementary semantic techniques and sought patent protection of them.

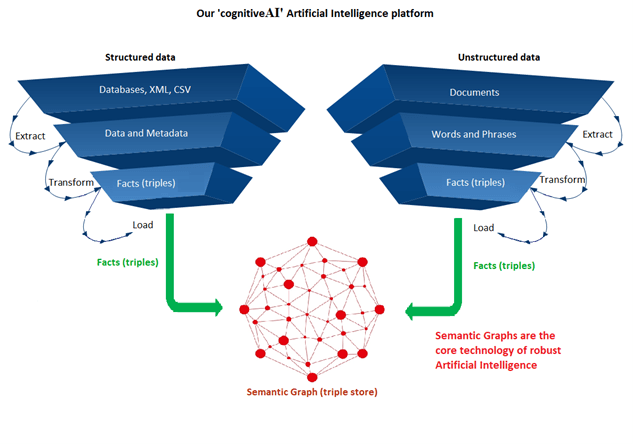

The level of intelligence we can deliver to a computer with the Berners-Lee semantic computing architecture is the highest level available today. It is enabled by the building of a Semantic Graph, a sophisticated repository of knowledge that a computer can understand by itself. It is the best we have when it comes to artificial intelligence, and indeed the only implementation for robust and automated artificial intelligence.

There are alternatives to Semantic Graphs known as RDF Graphs and Property Graphs, and blends of the two. It is important for potential customers to understand the differences.

1. Semantic Graphs utilize the RDF data model for representing facts. They utilize the Web Ontology Language, “OWL”, data model for representing knowledge. Facts and knowledge are represented in the Semantic Graph (often called a Knowledge Graph) and held in a Semantic Graph database (often called a native “triple store”). They are open systems enabling the highest level of connectivity for sharing data and information in collaborations suitable for medical research, known as linked open data.

2. RDF Graphs utilize the RDF data model for representing facts. They utilize an inferior model, or no model, for representing knowledge. They may be hybrid graphs using an inferior “workaround” approach to “supporting” RDF and OWL knowledge models. They can be open systems for sharing data, but not knowledge.

3. Property Graphs also utilize inferior knowledge representation models to achieve “ease of use”. They are generally proprietary technologies that lock users in, making escape to a superior graph more difficult and expensive. They often claim to be suitable for Artificial Intelligence; they are not. Management and maintenance of these graphs can become a significant burden and a dead-end street. They are closed systems.
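The distinction between facts and knowledge drawn above can be sketched in a few lines of plain Python. This is only an illustrative toy, not an RDF or OWL implementation. It assumes facts are instance-level triples, knowledge is a small class hierarchy, and a single subclass rule stands in for the much richer reasoning that OWL supports. The `ex:` identifiers and the `infer_types` helper are hypothetical names chosen for the example.

```python
# Toy sketch: facts and knowledge as (subject, predicate, object) triples.
# The ex: identifiers and infer_types are hypothetical, for illustration only.

RDF_TYPE = "rdf:type"
SUBCLASS_OF = "rdfs:subClassOf"

# Knowledge: schema-level statements (the role an ontology plays).
knowledge = {
    ("ex:Coronavirus", SUBCLASS_OF, "ex:RNAVirus"),
    ("ex:RNAVirus", SUBCLASS_OF, "ex:Virus"),
}

# Facts: instance-level statements (the role RDF data plays).
facts = {
    ("ex:SARS-CoV-2", RDF_TYPE, "ex:Coronavirus"),
}


def infer_types(facts, knowledge):
    """Return the facts plus every rdf:type triple implied by the class hierarchy."""
    superclasses = {}
    for s, p, o in knowledge:
        if p == SUBCLASS_OF:
            superclasses.setdefault(s, set()).add(o)

    inferred = set(facts)
    changed = True
    while changed:  # repeat until no new triple appears (a fixed point)
        changed = False
        for s, p, o in list(inferred):
            if p != RDF_TYPE:
                continue
            for parent in superclasses.get(o, ()):
                triple = (s, RDF_TYPE, parent)
                if triple not in inferred:
                    inferred.add(triple)
                    changed = True
    return inferred


graph = infer_types(facts, knowledge)
for triple in sorted(graph):
    print(triple)
```

Only one fact was stated, but the graph now also records that the instance is an RNA virus and a virus. A graph that holds facts without a knowledge model would need a person or a program to supply those extra statements.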

Commercial Progress

In the past twelve months, we have transitioned from being an R&D company into a Sales and Marketing company with a strong, world-leading intellectual property profile.

We offer an Artificial Intelligence platform with a Semantic Graph and tools to load the graph with facts and knowledge, and to manage it. We can train the platform to populate the Semantic Graph with knowledge extracted from documents using advanced Machine Learning.

The major challenge of the transition to commercialization is to be able to communicate the benefits of deep technology without getting tangled up in the technical detail. The historically surprising lack of leadership from the software industry leaders means that our company often needs to explain what the many misunderstood technologies are good at, and not good at.

The decision makers for Artificial Intelligence projects that are likely to succeed, the C-level executives, need to know how it will affect their bottom line and how much it will cost, not how it works. We need to know how it works so that we can confidently advise customers on digital strategies that will avoid disappointments, e.g. start small, go fast.

There are around thirty companies that offer various graph databases that are not suitable for Artificial Intelligence. They are being treated with increased skepticism and caution.

“Most of our clients start directly with Knowledge Graphs, but we recognize that that isn’t the only path. Our contention is that a bit of strategic planning up front, outlining where this is likely to lead gives you a lot more runway. You may choose to do your first graph project using a property graph, but we suspect that sooner or later you will want to get beyond the first few projects and will want to adopt an RDF / Semantic Knowledge Graph based system.”

There are many companies that require Semantic Graphs.

These companies are characterized by core business systems storing data in “silos” that make it very difficult to get a single view of a business, what Banks call the “single view of the customer”.
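To make the “silo” problem concrete, the sketch below merges records from two separate systems into one set of triples and then reads back everything known about a single customer. It is a simplified illustration only. The silo names, field names, and values are invented, and it assumes both systems already share a customer identifier, which in practice is often the hardest part.

```python
# Invented extracts from two hypothetical silos that share a customer_id.
loans_silo = [{"customer_id": "C001", "loan_balance": 250000}]
cards_silo = [
    {"customer_id": "C001", "card_limit": 10000},
    {"customer_id": "C002", "card_limit": 5000},
]


def to_triples(records, key="customer_id"):
    """Turn flat records into (subject, predicate, object) triples."""
    triples = set()
    for record in records:
        subject = f"ex:customer/{record[key]}"
        for field, value in record.items():
            if field != key:
                triples.add((subject, f"ex:{field}", value))
    return triples


# One graph holding the statements from both silos.
graph = to_triples(loans_silo) | to_triples(cards_silo)


def single_view(graph, subject):
    """Collect every statement about one subject, whichever silo it came from."""
    return {predicate: obj for s, predicate, obj in graph if s == subject}


view = single_view(graph, "ex:customer/C001")
print(view)
```

Because every statement has the same shape, adding a third silo means adding more triples to the graph rather than redesigning a schema.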

CSL chief executive officer Paul Perreault has hardly had a misstep in his six and a half years in the top job, but the leader of the $148 billion blood products giant says the company has more work to do on its digital strategy.

“When I think about the general business, we do a lot of things extremely well, but one of the things we need to continue to look at is our digital strategy,” he said. (Australian Financial Review, 13 February 2020.)

We can transition a customer into a robust and automated Artificial Intelligence strategy.

The disasters that have befallen Australian Banks in recent years highlight the lack of insight companies can have into their own businesses. These are companies that are a critical pillar of the Australian economy but are running blind as their Chairpersons, Managing Directors and others fall on their swords for their company’s accidental malfeasance.

It now seems certain that recognition of the need for distributed Semantic Graphs will be sharply accelerated in 2020 and 2021. The ill-understood COVID-19 and its potential to either continue its terror or give birth to a more frightening descendant in the future will put heavy focus on finding stronger Artificial Intelligence than what a decade of hype has delivered.

For Medical Research and other mission-critical applications of Artificial Intelligence we need industrial-strength technologies that can be trusted to deliver the highest levels of data integrity and accuracy possible. Those technologies must be the 100% pure techniques proposed by Berners-Lee and developed by W3C over 20 years of standards-setting working groups.

There is no role here for toys, training wheels, or hype.

Our task is to help that happen by demonstrating the power of Artificial Intelligence in highly complex data environments, in Banks, in Medical Research, in Pharmaceutical companies, in industrial environments.

We must engage with the leaders in these industries, the visionaries.